Creating Your Own Website

By default, Apache comes with a basic site (the one that we saw in the previous step) enabled. We can modify its content under /var/. We can also modify how Apache handles incoming requests and have multiple sites running on the same server by editing its Virtual Hosts file.

Today, we're going to leave the default Apache virtual host configuration as it is and set up our own for a new site.

So let's start by creating a folder for our new website in /var/www/ by running: sudo mkdir /var/www/gci/

We have it named gci here, but any name will work, as long as we point to it in the virtual hosts configuration file later.

Now that we have a directory created for our site, let's put an HTML file in it. Let's go into our newly created directory: cd /var/www/gci/

Create an index.html file there and paste in the page's HTML. The visible text of the page reads: I'm running this website on an Ubuntu Server server!

Setting up the VirtualHost Configuration File

Now let's create a VirtualHost file so that our site shows up when we type in its address.

We start this step by going into the configuration files directory: cd /etc/apache2/sites-available/

Since Apache came with a default VirtualHost file, let's use that as a base (gci.conf is used here to match our subdomain name): sudo cp nf gci.conf

Now edit the configuration file: sudo nano gci.conf

We should set ServerAdmin to our email address so users can reach us in case Apache experiences any error.

We also want the DocumentRoot directive to point to the directory our site files are hosted on: DocumentRoot /var/www/gci/

The default file doesn't come with a ServerName directive, so we'll have to add one below the last directive and set it to our subdomain.
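As a rough sketch of the steps in this section, the script below stages the two files described here (the site's index.html and the gci.conf virtual host) in a scratch directory, so they can be reviewed before being copied into place with sudo. The domain gci.example.com, the email address, and the HTML markup around the page text are placeholders assumed for illustration. Only the paths, the directive names, and the page text come from the walkthrough.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder values (assumptions): substitute your own subdomain and email.
SERVER_NAME="gci.example.com"
SERVER_ADMIN="webmaster@example.com"
DOC_ROOT="/var/www/gci/"

# Stage everything in a scratch directory; nothing here needs root.
STAGE_DIR="$(mktemp -d)"
INDEX_FILE="$STAGE_DIR/index.html"
CONF_FILE="$STAGE_DIR/gci.conf"

# Minimal page; the markup is assumed, the sentence is from the walkthrough.
cat > "$INDEX_FILE" <<'EOF'
<html>
  <head>
    <title>gci</title>
  </head>
  <body>
    <p>I'm running this website on an Ubuntu Server server!</p>
  </body>
</html>
EOF

# Virtual host with the three directives discussed above.
cat > "$CONF_FILE" <<EOF
<VirtualHost *:80>
    ServerAdmin $SERVER_ADMIN
    DocumentRoot $DOC_ROOT
    ServerName $SERVER_NAME
</VirtualHost>
EOF

echo "Staged files in $STAGE_DIR:"
ls "$STAGE_DIR"
```

After checking the staged files, they could be copied into /var/www/gci/ and /etc/apache2/sites-available/ with sudo cp. On Ubuntu a new site is normally enabled with sudo a2ensite gci.conf followed by an Apache reload; that step is not covered in the text here, so treat it as a pointer rather than part of this walkthrough.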


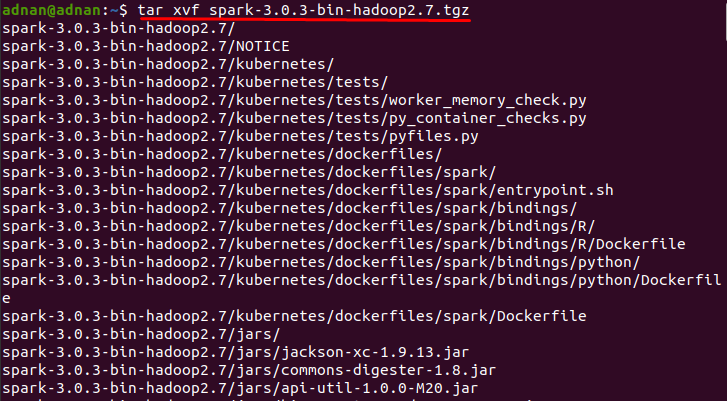

Alternatively, you can use the wget command to download the file directly in the terminal. 3.1.2) at the time of writing this article.

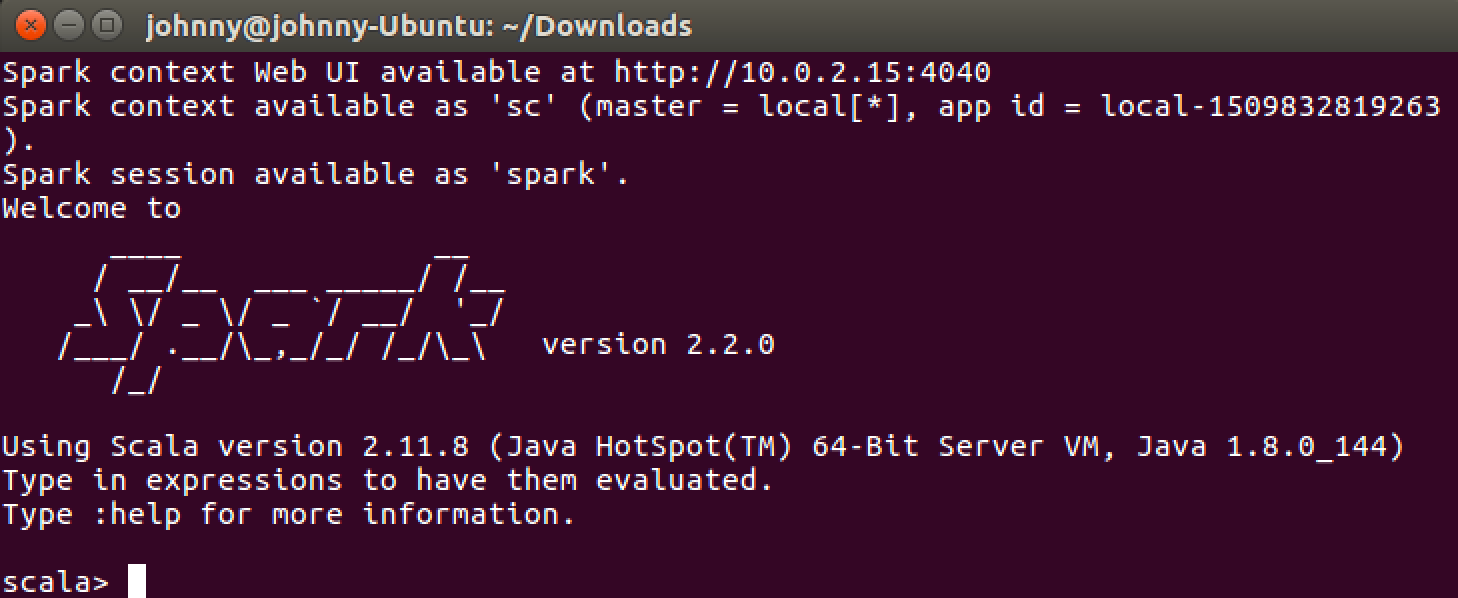

To find out more about sparkR, check out the documentation here. Now go to the official Apache Spark download page and grab the latest version (i.e. This is a light-weight interface to Spark from R.
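For the wget route, a minimal sketch might look like the following. The version number 3.1.2 comes from the article; the Hadoop 3.2 build and the archive.apache.org URL layout are assumptions, so check the download page for the exact link. The script only prints the URL unless RUN_DOWNLOAD=1 is set, a hypothetical switch added here so the sketch can be inspected without network access.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Version named in the article; the Hadoop build and URL layout are assumptions.
SPARK_VERSION="3.1.2"
HADOOP_BUILD="hadoop3.2"
ARCHIVE="spark-${SPARK_VERSION}-bin-${HADOOP_BUILD}.tgz"
URL="https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/${ARCHIVE}"

echo "Download URL: $URL"

# Set RUN_DOWNLOAD=1 to actually fetch and unpack the archive.
if [ "${RUN_DOWNLOAD:-0}" = "1" ]; then
    wget "$URL"
    tar -xzf "$ARCHIVE"
fi
```

Run it as RUN_DOWNLOAD=1 bash download-spark.sh (the script name is arbitrary) once the URL has been confirmed against the download page.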
